The testing process is key to delivering quality software. But as the demand for faster delivery increases, it becomes harder for human teams to keep up. Luckily, test automation can take over many repetitive testing tasks.

And DevOps can further help position testing efforts within the software development cycle. But when dealing with larger software products that have constantly evolving functionality, test automation gets trickier.

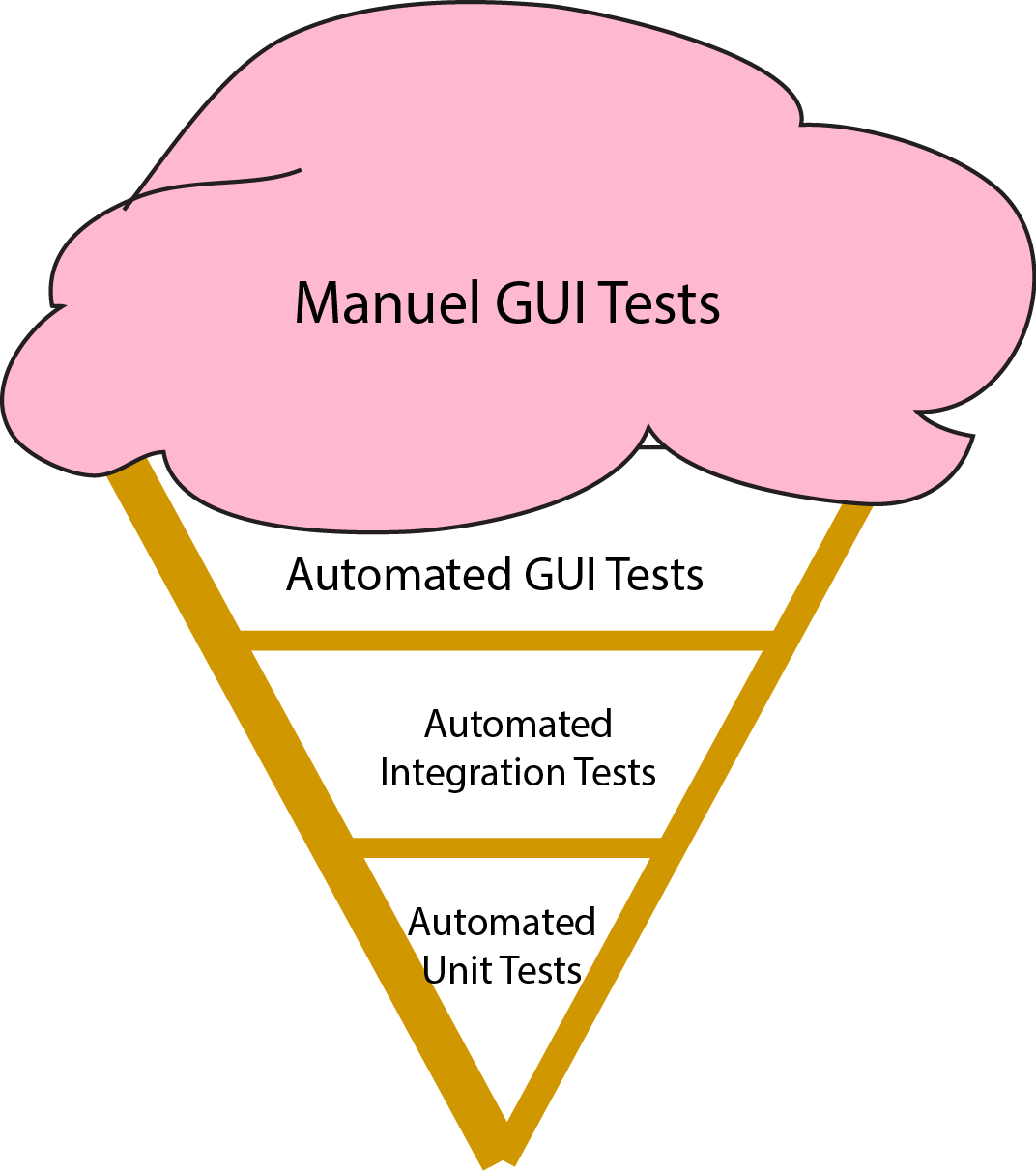

Unfortunately, organizations struggle to balance resources across different testing stages and exercises. One negative outcome is the ice cream cone anti-pattern. But before we delve into it, let’s first understand the anatomy of test automation and its value.

The Value of Test Automation in DevOps

When using a DevOps approach, fostering agility in problem-solving is essential. Often, processes like development and testing may run concurrently, which can be accommodated within the DevOps framework’s continuous testing capability.

One of the major benefits of test automation is that developers don’t have to wait for a manual test cycle to finish before responding to detected issues. Feedback cycles are shortened because bugs and other problems are caught earlier.

Test automation also enables teams to cover more software features and discover issues in a shorter time. Automation allows developers to write more elaborate tests that aren’t constrained by what a single human tester can run, making it ideal for larger software projects that have disparate parts.

Test automation can also promote greater collaboration between development and operations teams through improved communication. With test automation tools in place, other departments that need logs and other information on test exercises can find and share it easily and instantly. You can also incorporate other digital transformation tools to improve testing collaboration.

Since you’ll need fewer people for testing exercises, test automation also saves money. Additionally, when errors are found early, they cost less to fix and don’t massively distort the delivery schedule.

These funds can then be reallocated to other aspects of software production. Teams can have all the right tools available, and any extra hands that can be applied elsewhere are shifted there. You can learn more about how cost-per-defect manifests in a practical context in this Capers Jones breakdown.

Test automation offers better insights and a generally improved analytics experience, extending to memory and file contents, data tables, internal program states, etc. This means you’ll have an easier time figuring out what went wrong rather than burning time getting to the cause of the issue.

Reusability is another valuable characteristic of test automation. You can always apply your test suite to any other use cases and projects that have considerable similarities to those where you originally applied automation.

All in all, you get more features, better organization, speed, and accuracy, helping bring products to the market faster with less stress on quality assurance (QA) teams and other personnel.

What Complicates Test Automation in DevOps?

At the onset of developing a software product, an organization may put more of the QA team’s effort into manual definition and execution of tests. But as more features are added, the labor needed for testing increases. And if these features interact with each other a lot, that’s tough luck for you.

Not only will the new features have to be tested for their individual capability, but they’ll also have to be tested in terms of how well they work with others. People will be stretched thin and some software issues could end up falling through the cracks. Then, the software will have to be examined again and everyone falls behind.

As test automation comes to the rescue, it will most likely have some unpleasant surprises down the road too. The volume of tests written will eventually grow, and maintaining the test suite will be more demanding. And while speed and quality can be controlled in these cases, you’re not entirely out of trouble.

With testing partitioned into various exercises that must follow a strict order, this approach will only work well for an entirely new codebase. For larger, established codebases, most of the effort could remain bogged down in unit tests, for example. So yes, you’re using automation, but not necessarily in the best way possible.

While you could be using it to get different things across various exercises moving, you’re instead using it to get only one exercise moving rapidly. Ultimately, you’re still responding to only one specific set of results even if you could be tackling multiple sets.

Potential Patterns for Test Automation in DevOps

Here are some of the patterns that an organization can follow when applying test automation:

Pyramid Testing

When following the testing pyramid, there will be three automated test levels coming in before you get to the manual tests.

Automated Unit Tests: This level would be the starting point at the bottom of the pyramid. It involves a lot of test situations. These would be done separately, which shortens the execution time per block of the application code.

You get to see how the software behaves under different circumstances, try valid and invalid inputs, and also discover any unexpected behavior. Here, you can get a lot of feedback quickly.
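As a minimal sketch of this level, the Python snippet below tests one small function in isolation with both valid and invalid inputs. The function `apply_discount` and its validation rules are hypothetical, invented purely for illustration.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    """Each test exercises one small behavior, so a failure points at one cause."""

    def test_valid_input(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)

    def test_negative_price_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(-1.0, 10)
```

Saved to a file, these tests can be run with `python -m unittest` followed by the file name. Because nothing outside the function is involved, the whole class finishes almost instantly, which is what makes this level suitable for a large number of scenarios.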

Automated Integration Tests: In this next level, you try scenarios that involve different code components. The focus is on how these components work together and whether every call and response is spot on.

You also won’t have to run many test scenarios. At this level, things may move a bit slower since you’re mainly examining the intercommunication aspect. These test scenarios would cover APIs, methods, classes, etc.
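To illustrate the difference from a unit test, the sketch below wires two components together and checks the call and its effect on the other side. Both classes, `Inventory` and `OrderService`, are hypothetical in-memory stand-ins; a real integration test would more likely involve a database or a network API.

```python
class Inventory:
    """Hypothetical component that tracks stock levels."""

    def __init__(self, stock: dict[str, int]):
        self._stock = dict(stock)

    def reserve(self, sku: str, quantity: int) -> bool:
        """Reserve stock if enough is available and report success."""
        if self._stock.get(sku, 0) < quantity:
            return False
        self._stock[sku] -= quantity
        return True

    def available(self, sku: str) -> int:
        return self._stock.get(sku, 0)


class OrderService:
    """Hypothetical component that depends on Inventory."""

    def __init__(self, inventory: Inventory):
        self._inventory = inventory

    def place_order(self, sku: str, quantity: int) -> str:
        reserved = self._inventory.reserve(sku, quantity)
        return "confirmed" if reserved else "rejected"


def test_order_reserves_stock():
    inventory = Inventory({"widget": 5})
    orders = OrderService(inventory)
    assert orders.place_order("widget", 3) == "confirmed"
    # The call must also have had the expected effect on the other component.
    assert inventory.available("widget") == 2


def test_order_rejected_when_stock_is_short():
    inventory = Inventory({"widget": 1})
    orders = OrderService(inventory)
    assert orders.place_order("widget", 3) == "rejected"
    # A rejected order must leave the stock untouched.
    assert inventory.available("widget") == 1
```

Note that neither test re-checks the internal rules of `Inventory`; those belong at the unit level. The integration tests only confirm that the request and the response between the two components line up.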

Automated UI Tests: This would be the third level, and would also be of an end-to-end nature. You test an application with the integrations running, and emulate real-life user interactions. This level would have tests running much slower since you’re testing more elaborate scenarios from start to end.

You’ll have fewer tests, covering major features, happy paths, and more. You get to discover how several components work together when needed in a typical use case.

The Ice Cream Cone (Anti-Pattern)

In this pattern, the testing pyramid is inverted, so to speak. This means that a lot more of the QA team’s efforts are sucked away from automating unit tests. The same will happen for the other testing levels, though it might be to a lesser extent.

Nevertheless, the result is that most of the testing is done manually. Automated tests will largely sit at the UI/end-to-end level and, to some extent, the integration level too. The team ends up stretched thin across these upper test levels. You’ll have fewer automated unit tests and move much slower in this aspect.

You may also have bugs and other random issues constantly trickling down from the manual tests, but not always being caught in time. In an agile environment that demands frequent releases, you can’t thoroughly respond with the necessary changes when unit tests are limited. You won’t be able to strike a decent balance between delivery speed and remaining flaws in your next release.
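One rough way to spot this shape is to compare how many automated tests exist at each level. The sketch below is an illustrative heuristic only: the function name `automation_shape` and the simple ordering rule are assumptions made for this example, not an established metric, and it ignores manual testing effort entirely.

```python
def automation_shape(unit: int, integration: int, ui: int) -> str:
    """Classify a suite by its automated test counts per level (rough heuristic)."""
    if unit + integration + ui == 0:
        return "no automated tests"
    # Widest at the bottom, narrowest at the top.
    if unit >= integration >= ui:
        return "pyramid"
    # Widest at the top, narrowest at the bottom.
    if ui >= integration >= unit:
        return "ice cream cone"
    return "mixed"


if __name__ == "__main__":
    # The counts below are made-up numbers for demonstration.
    print(automation_shape(unit=400, integration=80, ui=20))
    print(automation_shape(unit=15, integration=60, ui=200))
```

A check like this could run as part of a build report, so that a drift from the first shape toward the second becomes visible before it turns into a habit.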

The Cupcake (Anti-Pattern)

Here, there’s a team for development, manual testing, and automated testing. They all work separately and each team tries to cover as many scenarios at each test level as possible. The problem is, with minimal communication, a lot of time can be wasted as teams have massive overlap in the scenarios tested.

And while there might be more automated UI and integration tests, unit tests still lag behind and there are more hands on deck for manual testing.

Avoiding the Ice Cream Cone and Improving Testing Patterns

Depending on whether you’re working with a legacy application or starting from scratch, it’s easy to find your organization falling into inefficient testing patterns. And the continuous demand for new features and improvements leaves you with little time to breathe and plan properly.

Here are some best practices for test automation that can help you avoid the ice cream cone and the downsides of other patterns:

- Encourage developers to adopt test-driven development. New pieces of code should all come with micro-tests, making unit tests more comprehensive. Organizations should invest in suites that can run large amounts of low-level test scenarios.

- Limit the outsourcing of testing. Instead, try to have people that are conversant with the code. Over time, having team-owned tests will enable you to get to problematic areas much faster.

- Set up your plan while considering the amount of additional development that may be needed when solving problems related to different components. This will enable you to leave room for slotting in unit and integration tests on demand.

- Encourage communication between team members running test scenarios at different levels. This way, there are more people aware of any issues discovered, along with the effort required to rectify them. Eventually, the entire team will know the greatest source of problems and determine how much more testing to do in subsequent rounds.

- Always have clear contracts with micro-service providers. This helps you ensure that they are doing things the exact way they should be, and can result in a leaner integration testing exercise.

- Make sure team members understand the value that each test scenario produces for the consumer. By doing so, team members will have a better idea of how many scenarios they need to run at each level and eventually achieve shorter testing times per level.
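The point about clear contracts with service providers can be made concrete with a small consumer-side check. The sketch below assumes a hypothetical contract, `ORDER_STATUS_CONTRACT`, that lists the fields and types a consumer expects in a response payload; the field names are invented for illustration, and dedicated contract-testing tools cover far more than this.

```python
# Hypothetical contract agreed with a service provider: field name -> expected type.
ORDER_STATUS_CONTRACT = {"order_id": str, "status": str, "total_cents": int}


def contract_violations(payload: dict, contract: dict) -> list[str]:
    """Return human-readable problems; an empty list means the payload conforms."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}, "
                f"got {type(payload[field]).__name__}"
            )
    return problems


if __name__ == "__main__":
    good = {"order_id": "A-1", "status": "shipped", "total_cents": 1999}
    bad = {"order_id": "A-1", "total_cents": "1999"}
    print(contract_violations(good, ORDER_STATUS_CONTRACT))
    print(contract_violations(bad, ORDER_STATUS_CONTRACT))
```

When both sides verify the same contract, many mismatches surface in a fast, isolated check instead of a slower integration run, which is how contracts can keep the integration exercise lean.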

Conclusion

To get the most out of test automation, there needs to be an emphasis on unit tests. You want to make sure that there are fewer discoveries to make as you go up the levels. Failure to do so will result in more problems not being caught.

Those that are discovered will likely be caught late, not to mention that more time will be spent trying to fully understand them. It also helps to be aware of the scope of integration beforehand. Keep a meticulous record of all present components, and which ones intersect at some point.

This will enable you to go into integration tests knowing exactly what you want to test. You know all the necessary intercommunications and can quickly get down to scenarios that test those calls and responses. The same goes for software use cases. Have a list of the most important purposes of the software so that your end-to-end testing gets straight to the point.
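The record of components and their intersections can be as simple as a mapping from each component to the components it calls. The sketch below uses made-up component names in `COMPONENT_CALLS` and derives the caller and callee pairs that would each deserve at least one integration scenario.

```python
# Hypothetical record: each component mapped to the components it calls.
COMPONENT_CALLS = {
    "checkout": ["payments", "inventory"],
    "payments": ["notifications"],
    "inventory": [],
    "notifications": [],
}


def integration_pairs(calls: dict[str, list[str]]) -> list[tuple[str, str]]:
    """List every (caller, callee) pair, sorted, as candidate integration scenarios."""
    return sorted(
        (caller, callee)
        for caller, callees in calls.items()
        for callee in callees
    )


if __name__ == "__main__":
    for caller, callee in integration_pairs(COMPONENT_CALLS):
        print(f"{caller} -> {callee}")
```

Keeping this mapping next to the code means a new dependency shows up as a new pair, which is a prompt to add the matching integration test.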

*This is a Guest Post by Author: Gerald Ainomugisha.*

Gerald Ainomugisha is a freelance Content Solutions Provider (CSP) offering both content and copy writing services for businesses of all kinds, especially in the niches of management, marketing and technology.

